Live Improvisation with Fine-Tuned Generative AI

A Musical Metacreation Approach

Research Overview

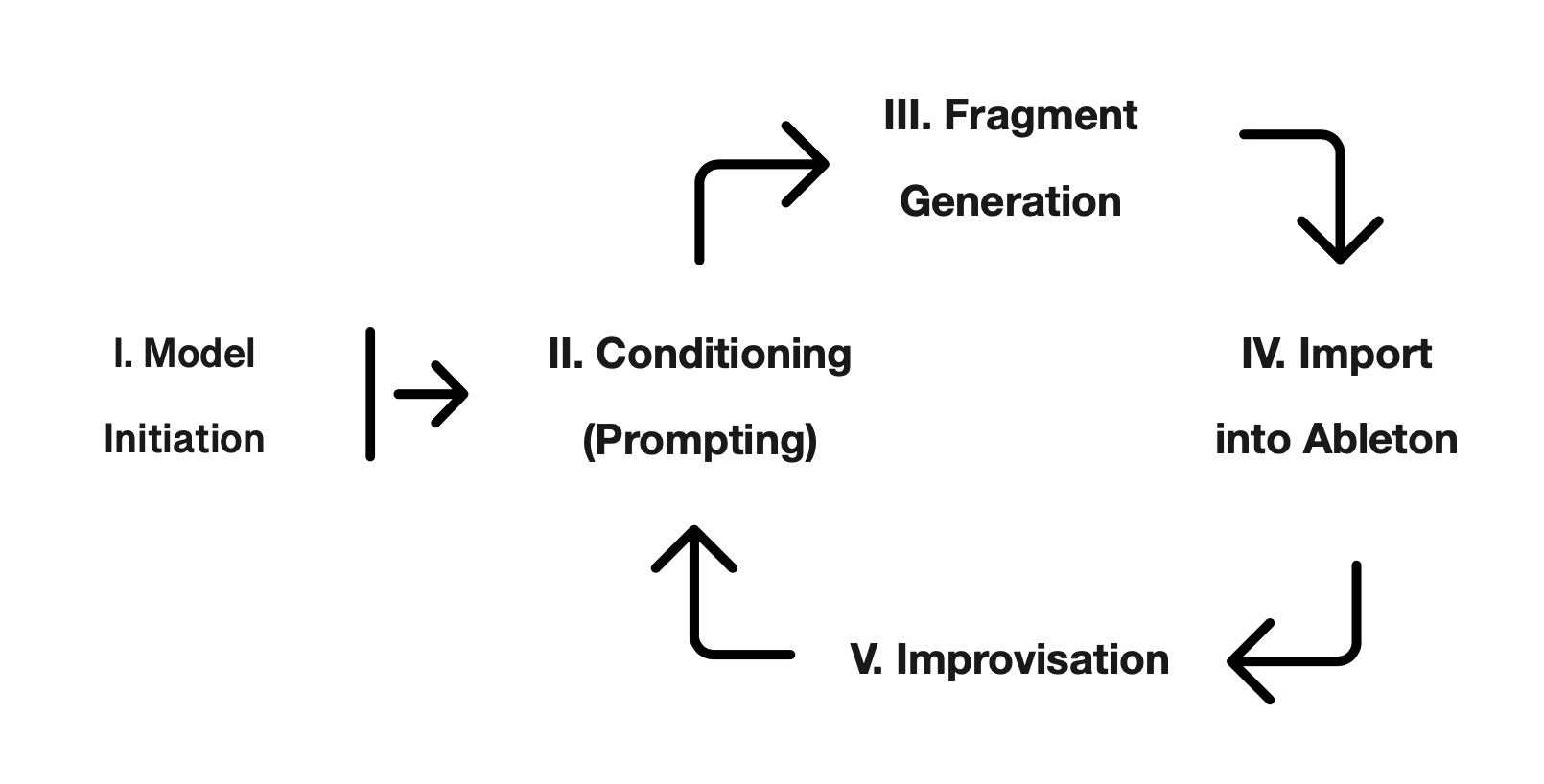

Improvisation Pipeline

Complete workflow integrating text prompts, AI model inference, and Ableton Live for dynamic musical co-creation.

Fine-Tuning Dataset

Custom dataset of compositions and field recordings used to fine-tune Stable Audio Open for personalized sound generation.

Inference Performance

Real-time performance analysis showing latency and generation times critical for live improvisation workflows.

Abstract

This paper presents a pipeline to integrate a fine-tuned open-source text-to-audio latent diffusion model into a workflow with Ableton Live for the improvisation of contemporary electronic music. The system generates audio fragments based on text prompts provided in real time by the performer, enabling dynamic interaction. Guided by Musical Metacreation as a framework, this case study reframes generative AI as a co-creative agent rather than a mere style imitator. By fine-tuning Stable Audio Open on a dataset of the first author's compositions and field recordings, this approach demonstrates the ethical and practical benefits of open-source solutions. Beyond showcasing the model's creative potential, this study highlights the significant challenges it still poses and the need for democratized tools with real-world applications.

Audio Examples

Improvisation 1

Improvisation 2

Improvisation 3

Future Directions

Our case study shows that GenAI can extend musical creativity beyond mere imitation. Rapid, responsive sound generation enables AI to catalyze new ideas and open fresh improvisational pathways. Despite this potential, high computational and data demands may hinder broader adoption. Future work will include evaluations with audiences and artists to assess artistic impact and guide improvements. Additionally, more effective fine-tuning on smaller datasets could reduce data requirements and broaden accessibility. Developing optimized pipelines that run on local hardware with limited resources will also be crucial. As this technology evolves and opens new possibilities for Musical Metacreation, our research aims to lay the groundwork for deeper human-AI collaboration in live improvisation.