Fragmenta

An All-in-One Pipeline for Training and Using Text-to-Audio Models

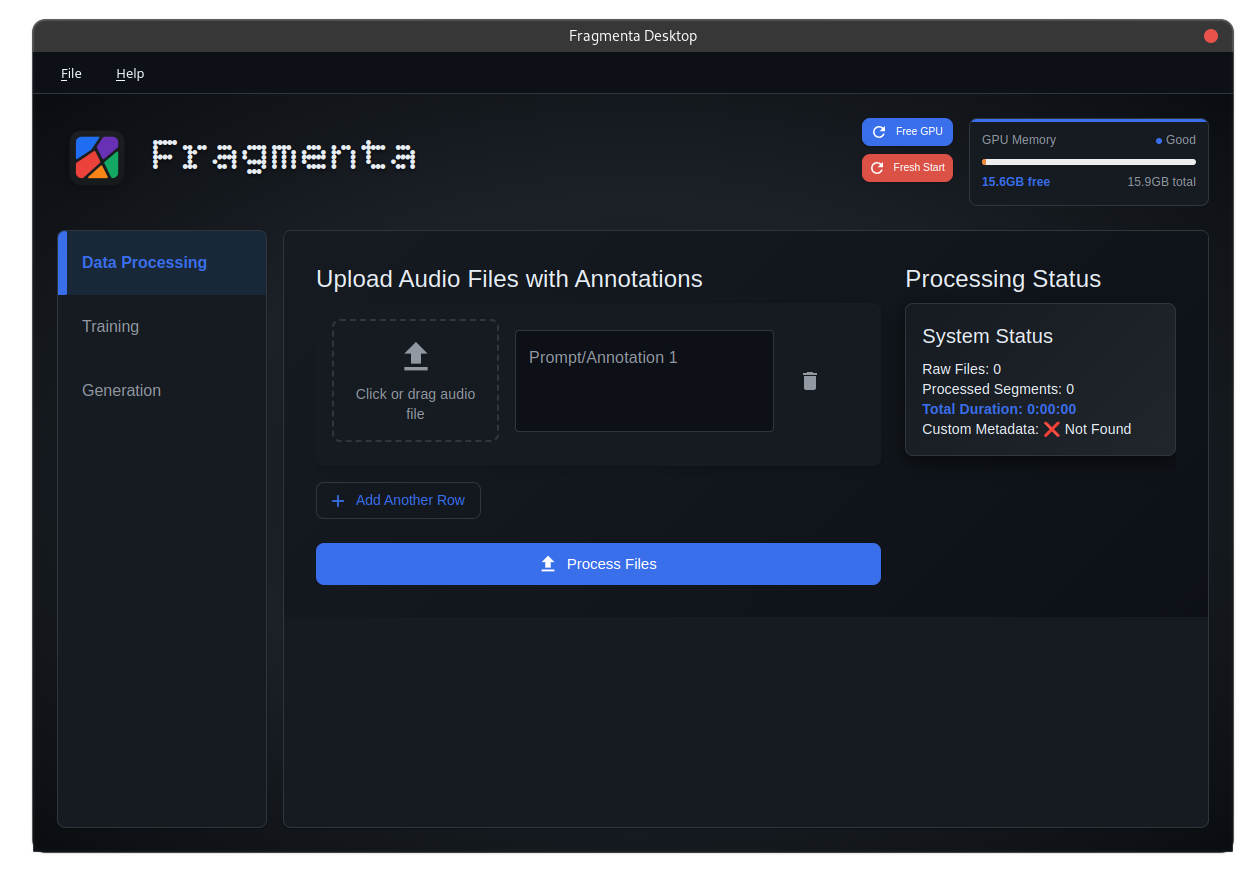

User Friendly Interface

A clean, intuitive interface designed for ease of use.

Why?

With AI seemingly everywhere, most tools are either locked behind subscription models designed for consumers, or they require deep technical knowledge, coding skills, and a huge time investment to use effectively. At the same time, the technology's narrative is being driven by big tech, turning access into a commodity. These technofeudal structures limit who gets to shape the future of AI and how it can be used creatively.

There are also ethical problems. Much of today’s AI is trained on vast amounts of data scraped from the internet without permission, often infringing on intellectual property rights. Built on open research from Stability AI, Fragmenta shows that ethically trained, personalized models can empower musicians and audio creators without infringing copyrights or compromising artistic integrity.

Participating in how the narrative of technology is shaped can be a form of democratic intervention. Fragmenta exists to enable artists to train models on their own work, gain a transparent view of their AI carbon footprint, and use AI on their own terms rather than as a product. Most importantly, all of this stays local: your music and recordings never leave your device.

Three Modules

Data Processing

Add your audio files and let Fragmenta handle the dataset creation:

- Drag & drop audio file upload

- Annotation field for tagging

- Automatic dataset creation

- Automated metadata creation
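To make the dataset step concrete, here is a minimal sketch of what pairing audio files with their annotations could look like. It is not Fragmenta's actual code or file format: the function names, the `file_name` and `prompt` keys, the JSON layout, and the list of extensions are all assumptions chosen for illustration.

```python
import json
from pathlib import Path

# Hypothetical set of extensions; the formats Fragmenta accepts are not stated here.
AUDIO_EXTENSIONS = {".wav", ".flac", ".mp3"}


def build_metadata(audio_dir, annotations):
    """Pair each audio file in audio_dir with its text annotation.

    annotations maps a file name to a prompt string; files without an
    annotation get an empty prompt.
    """
    entries = []
    for path in sorted(Path(audio_dir).iterdir()):
        if path.is_file() and path.suffix.lower() in AUDIO_EXTENSIONS:
            entries.append({
                "file_name": path.name,
                "prompt": annotations.get(path.name, ""),
            })
    return entries


def write_metadata(entries, out_path):
    """Write the entries as a JSON list (an assumed, illustrative layout)."""
    Path(out_path).write_text(json.dumps(entries, indent=2), encoding="utf-8")
```

Calling `build_metadata("my_recordings", {"take1.wav": "bowed metal, slow swell"})` would return one entry per audio file in that (hypothetical) folder, which `write_metadata` can then save next to the audio.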

Model Training

Fine-tune text-to-audio models with advanced configuration options:

- Stable Audio Open integration

- Custom training parameters

- Real-time loss monitoring

- One-click checkpoint unwrap

Audio Generation

Generate 44.1kHz stereo audio from text prompts using trained or base models:

- Ready to use even without fine-tuning

- Multiple model support

- Configurable duration

- Instant download
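As a rough guide to what a configurable duration means at 44.1kHz stereo, the sketch below computes how many sample frames a clip contains and how large it would be as uncompressed audio. The 16-bit PCM sample width is an assumption for the size estimate, not a statement about Fragmenta's output format, and the function names are hypothetical.

```python
SAMPLE_RATE = 44_100   # samples per second per channel (44.1kHz)
CHANNELS = 2           # stereo
BYTES_PER_SAMPLE = 2   # assumption: 16-bit PCM


def frame_count(duration_s):
    """Number of sample frames (one sample per channel) in a clip."""
    return round(duration_s * SAMPLE_RATE)


def pcm_size_bytes(duration_s):
    """Approximate size of the raw audio data, excluding any file header."""
    return frame_count(duration_s) * CHANNELS * BYTES_PER_SAMPLE
```

Under these assumptions, a 10-second clip holds 441,000 frames and about 1.76 MB of raw audio data.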

Real-time Monitoring

Training Monitor

Important Information

System Requirements

Prerequisites

IMPORTANT: Fragmenta is built on open‑source research and models. It currently uses open‑source diffusion models from Stability AI, with support for additional architectures planned in the future. Fragmenta is intended for experimental music and does not create realistic audio. The project is in early development and not intended for production use. The first beta will be released soon and will be free to use. Feedback from early users will help shape its future development.

All models used in Fragmenta are subject to the terms and conditions of their respective licenses. By using Fragmenta—especially for commercial purposes—you agree to comply with these licensing terms. The creator of Fragmenta assumes no responsibility for how the software or models are used. Users are solely responsible for ensuring their own compliance with applicable laws and licenses.